Deploying Machine Learning Models: A Step-by-Step Guide

Deploying a machine learning model turns a trained algorithm into something an application can actually call. This guide walks through the process step by step: understanding deployment, preparing the model, choosing an environment, implementing the rollout, and maintaining the model afterward.

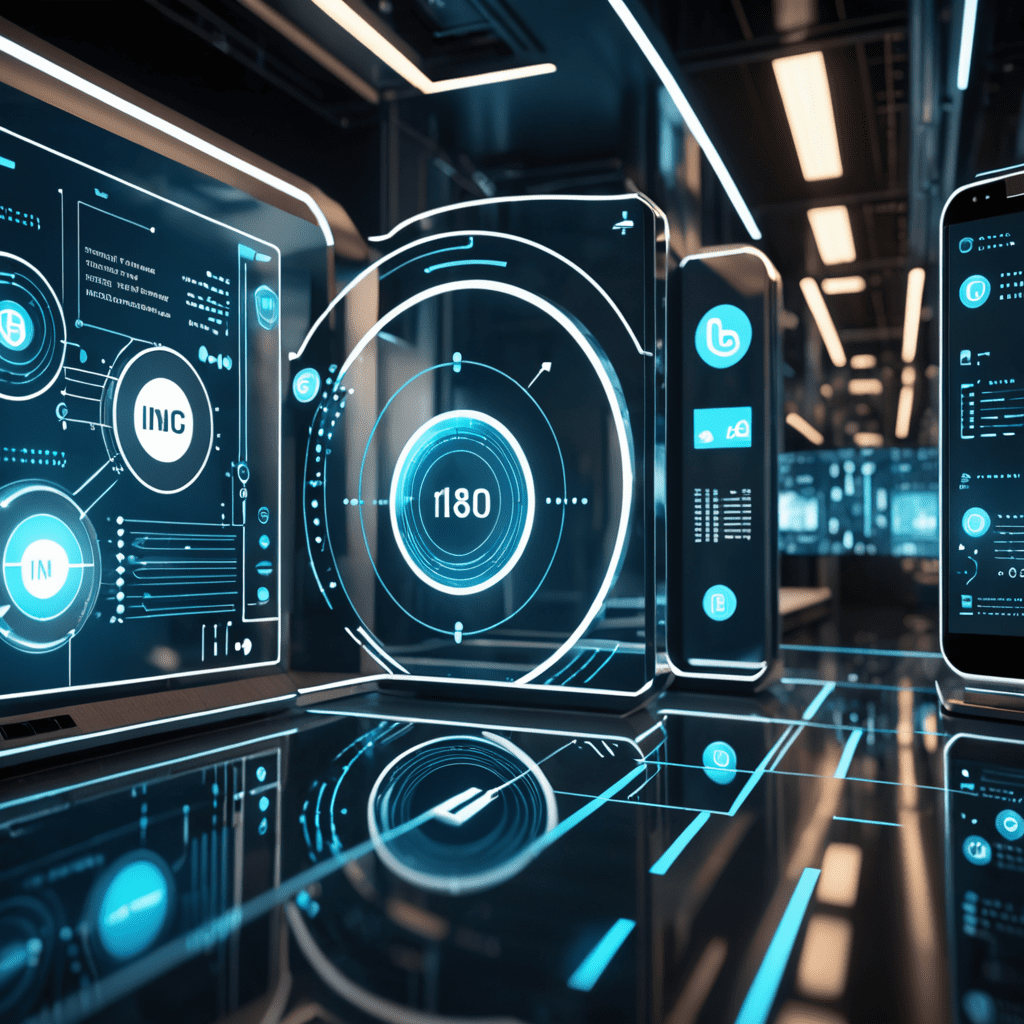

Understanding Model Deployment

Before starting, it helps to be clear about what model deployment entails. Deployment means making a trained model available to real inputs, for example behind an API or inside a scheduled batch job, so that its predictions can inform real-world applications.

Preparing the Model for Deployment

A model needs thorough preparation before it is deployed. The main steps are data preprocessing, feature engineering, and model validation, and the preprocessing and feature logic used in training must be applied identically at inference time.
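
As a minimal sketch of that preparation step, the snippet below fits a scaler on training rows only, reuses the saved parameters at inference time, and gates the release on holdout accuracy. The function names (`fit_scaler`, `apply_scaler`, `holdout_accuracy`, `ready_to_deploy`) and the 0.9 threshold are illustrative assumptions, not part of any particular library.

```python
from statistics import mean, pstdev


def fit_scaler(rows):
    """Compute per-feature mean and standard deviation from training rows."""
    columns = list(zip(*rows))
    return {
        "mean": [mean(col) for col in columns],
        # A constant feature has zero spread; fall back to 1.0 to avoid dividing by zero.
        "std": [pstdev(col) or 1.0 for col in columns],
    }


def apply_scaler(scaler, row):
    """Standardize one row with the parameters learned at training time."""
    return [(x - m) / s for x, m, s in zip(row, scaler["mean"], scaler["std"])]


def holdout_accuracy(predict, scaler, rows, labels):
    """Fraction of held-out rows the model classifies correctly."""
    hits = sum(
        predict(apply_scaler(scaler, row)) == label
        for row, label in zip(rows, labels)
    )
    return hits / len(labels)


def ready_to_deploy(accuracy, threshold=0.9):
    """Hypothetical release gate: block deployment below the threshold."""
    return accuracy >= threshold
```

Storing the scaler parameters alongside the model is one way to keep training and serving consistent; the right threshold depends on the application.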

Selecting the Deployment Environment

The deployment environment affects latency, cost, and operational effort, so it is worth choosing deliberately. The main options are cloud-based platforms, edge computing (running the model on or near the device that produces the data), and on-premises deployment.

Implementing the Deployment Process

Once the model is prepared and the environment is selected, the deployment itself comes down to three tasks: serving the model so applications can request predictions, versioning it so a release can be traced and rolled back, and monitoring it in production.
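
The sketch below shows how serving, versioning, and monitoring can fit together in one small in-process component. `ModelRegistry` and its methods are hypothetical names chosen for illustration; a production system would more likely put this behind a web framework or a dedicated model server.

```python
import time


class ModelRegistry:
    """Hypothetical registry: holds versioned models and serves the active one."""

    def __init__(self):
        self._models = {}
        self._active = None
        self.request_log = []  # one entry per served prediction, for monitoring

    def register(self, version, predict_fn):
        """Store a model under a version label; versions are never overwritten."""
        if version in self._models:
            raise ValueError(f"version {version!r} is already registered")
        self._models[version] = predict_fn

    def promote(self, version):
        """Make a registered version the one that serves traffic (also used to roll back)."""
        if version not in self._models:
            raise KeyError(version)
        self._active = version

    def predict(self, features):
        """Serve a prediction and record which version answered and how long it took."""
        if self._active is None:
            raise RuntimeError("no active model version")
        start = time.perf_counter()
        prediction = self._models[self._active](features)
        latency = time.perf_counter() - start
        self.request_log.append({"version": self._active, "latency_s": latency})
        return {"version": self._active, "prediction": prediction}
```

A short usage example, with two stand-in models:

```python
registry = ModelRegistry()
registry.register("v1", lambda features: sum(features) > 1)
registry.register("v2", lambda features: sum(features) > 5)
registry.promote("v2")
registry.predict([1, 2])   # answered by v2
registry.promote("v1")     # roll back if v2 misbehaves
```

Returning the version with every prediction makes it possible to trace a bad output back to the release that produced it.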

Ensuring Model Maintenance and Updates

After deployment, a model needs continuous maintenance to keep performing well. Best practice covers routine maintenance, a retraining strategy, and a plan for handling concept drift, where the relationship between inputs and the target changes over time and erodes accuracy.
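
One common way to decide when retraining may be needed is to compare the distribution of a feature in production against its distribution at training time. The sketch below uses the Population Stability Index (PSI) for that comparison. Note the assumption: PSI measures shift in the inputs, which is only a proxy for concept drift, and the function name `psi`, the bin count, and the floor value are illustrative choices.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the training range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range land in the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI near zero means the two samples look alike. A value above roughly 0.2 is often quoted as a rule of thumb for a significant shift, but treat that cutoff as a starting point and tune it against your own data.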

FAQ

What are the common challenges in deploying machine learning models?

Common challenges include scaling infrastructure to meet demand, keeping models under version control, and adapting to data patterns that change over time. Addressing them requires careful planning and a deployment strategy that accounts for each from the start.

How can model performance be monitored post-deployment?

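Monitoring model performance involves tracking key metrics such as accuracy, precision, and recall. These metrics depend on ground-truth labels, which often arrive after the prediction is made, so they are typically computed as labels become available rather than strictly in real time. Anomaly detection techniques can also be used to identify deviations in model behavior and trigger maintenance before users are affected.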
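
As an illustration, the sketch below keeps a rolling window of labeled predictions for a binary classifier and reports accuracy, precision, and recall over that window. The class name `RollingMetrics` and the default window size are assumptions made for the example.

```python
from collections import deque


class RollingMetrics:
    """Accuracy, precision, and recall over the most recent labeled predictions."""

    def __init__(self, window=1000):
        # A bounded deque drops the oldest pair once the window is full.
        self._pairs = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store one (prediction, ground truth) pair once the label is known."""
        self._pairs.append((bool(predicted), bool(actual)))

    def summary(self):
        tp = sum(1 for p, a in self._pairs if p and a)
        fp = sum(1 for p, a in self._pairs if p and not a)
        fn = sum(1 for p, a in self._pairs if not p and a)
        tn = sum(1 for p, a in self._pairs if not p and not a)
        total = tp + fp + fn + tn
        # A metric is None when its denominator is zero (nothing to measure yet).
        return {
            "accuracy": (tp + tn) / total if total else None,
            "precision": tp / (tp + fp) if tp + fp else None,
            "recall": tp / (tp + fn) if tp + fn else None,
        }
```

Comparing each summary against the values measured at validation time gives a simple signal for when to investigate or retrain.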

What is the role of containerization in model deployment?

Containerization with a tool such as Docker packages a model and its dependencies into a portable, isolated environment, which makes deployments reproducible across machines. An orchestrator such as Kubernetes then runs those containers across a cluster, which helps with scalability and resource utilization.